3D Computer Vision Software Improves Environmental Insights

Automation is growing in a range of applications and industries. This is due in large part to the labor challenges many industries are facing – automation can help to make work easier as well as complete tasks for which it is no longer possible to find people, allowing companies to remain productive.

Software is a key component of automation, helping to give directions on how something should move as well as interpreting the world around an automated vehicle or machine. The latter is especially important to ensure a safe, efficient and productive operation.

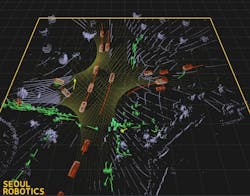

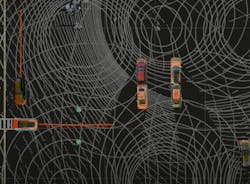

Seoul Robotics is among the companies developing software aimed at improving perception of environments for autonomy and a range of other applications. It specializes in three-dimensional (3D) computer vision software which can provide a more accurate depiction of an environment.

Power & Motion spoke with William Muller, Vice President of Business Development at Seoul Robotics, about the company’s latest technology release, SENSR 3.0, and the benefits of 3D computer vision software.

*Editor’s Note: Questions and responses have been edited for clarity.

Power & Motion (P&M): To start, could you provide some background on Seoul Robotics and the technologies it develops?

William Muller (WM): Seoul Robotics was founded in 2017. We are a 3D computer vision software company. We are basically an enabler of 3D data into different vertical use cases. Think of us as the software piece to a computer – the computer is a motherboard, the processor, but without the software doing something with that piece of hardware [the computer], it’s not of much use; that's what we are. We look at 3D sensor data and we ingest that data, process that data and then output information based on what we see within that data using 3D computer vision or deep learning techniques within our software.

Once we've analyzed that data, we basically output four types of classifications – a vehicle, a bike, a pedestrian, and a miscellaneous object, something that we haven't got a bucket for, we just know it's another object that does not fit into a specific class form. Each of those objects is perceived in a full three-dimensional world. We know where the physical location is versus two-dimensional [where] you can't really tell the distance of the object [and] where it might sit. In three dimensions you know the distance and the location of that object, you know the size, speed, velocity trajectory, its orientation…that results in a much higher level of accuracy of information. That data-rich information is a lot more usable for a vast variety of use cases [and] applications.
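
To make the kind of output Muller describes concrete, here is a minimal Python sketch of what a single perceived object might look like as a data record. It is an illustration only: the names `ObjectClass` and `PerceivedObject`, the field layout and the units are assumptions made for this example, not the actual SENSR output format.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple
import math


class ObjectClass(Enum):
    """The four classes named in the interview."""
    VEHICLE = "vehicle"
    BIKE = "bike"
    PEDESTRIAN = "pedestrian"
    MISC = "misc"  # an object that fits none of the other classes


@dataclass
class PerceivedObject:
    """Hypothetical record for one object; not the real SENSR schema."""
    object_class: ObjectClass
    position_m: Tuple[float, float, float]    # x, y, z in a shared world frame
    size_m: Tuple[float, float, float]        # length, width, height
    velocity_mps: Tuple[float, float, float]  # vx, vy, vz
    heading_rad: float                        # orientation about the vertical axis

    def speed_mps(self) -> float:
        """Scalar speed is the magnitude of the velocity vector."""
        return math.sqrt(sum(v * v for v in self.velocity_mps))

    def distance_to(self, point: Tuple[float, float, float]) -> float:
        """Straight-line distance from the object to a point in the same frame."""
        return math.dist(self.position_m, point)
```

Because position is stored in three dimensions, distance and speed fall out of simple vector arithmetic, which is the advantage over a two-dimensional image that the answer above points to.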

That's kind of the core, the elevator pitch of what we do with data. We are an NVIDIA partner; we leverage NVIDIA technology for our deep learning. All of our deep learning technologies are developed in house, so we trained our own models, trained our own data; we didn't use two-dimensional data and try to re-engineer it or anything like that. Everything is pure, three-dimensional data that we're utilizing within our applications.

P&M: The company recently introduced its SENSR 3.0 perception software – could you provide an overview of this technology – how it works, what benefits it offers?

WM: SENSR 3.0 is the big step forward we’ve taken for becoming a mainstream, commercialized product that is scalable. There is quite a bit of technology out there that is in cool demo phases but is highly intensive to deploy and has a lot of complexities. What Seoul Robotics has always focused on from the beginning is how can we move this technology into the mainstream, how do we make it a scalable technology and easily adaptable within different verticals.

That’s what SENSR 3.0 is – we’ve taken all that highly sophisticated technology and positioned it in a way that it’s usable in all these verticals. We try to remove a lot of the complexities…a lot of things that could potentially [cause] confusion and allow it to be essentially a ‘rinse and repeat’ product – [it’s] not just going into one deployment but hundreds and thousands of deployments and getting the same results, the same outcomes every time.

One big improvement we’ve made is something called quick tune, which is essentially a process that allows us to do easy calibration of the sensors because as you can imagine, you look at a two-dimensional picture and it’s easy to say what you’re looking at – I’m looking at a pole, a light or a street. In three-dimensional data, you’re seeing point cloud objects which are sometimes confusing to the human eye when you’re not used to it. What we’ve done is we look for things that are common and within a field; so if we see a square object, [we] use that as a reference point to do calibrations and as another reference point to do alignments and so forth. Again, it’s taking things that are easier within the technology and using that as a way to tune it and set it up a lot faster.

Additional features [include] a more scaled, distributed architecture design, allowing more edge power. There is a lot of effort in optimization for being able to run on virtual environments. That also allows for a lot of quick and scalable deployments.

The calibration process is, even in two-dimensional worlds, extremely tough especially with cameras [because] you have to consider day and night, rain, fog; every one of those environmental conditions has an impact on how the system performs when you’re running analytics or deep learning algorithms on it. It’s similar in the three-dimensional world too, the only difference is you’re not dealing with pixels, you’re dealing with points. And for us we have a lot more tolerance towards those environmental conditions compared to two-dimensional analytics where you’re not seeing a lot of those image variances, but we still have to be mindful of the calibration of it.

That’s essentially the biggest push for SENSR 3.0, [easier calibration] – an engineer can go to the side of the road [or] a shopping center and it is easy for them to set this up, walk away and know it’s going to work and perform in a certain manner.

There is more advanced scalability [and the ability to add] more systems. You can really mix and match the system based on the customer requirements. We’ve moved on to the next level of operating platform…we run on Ubuntu [an open-source Linux operating system]. Most engineering entities enjoy that platform because they [often] have other applications running on a Linux-based platform. We run that same platform but in our own environment.

The other major benefit of SENSR 3.0 is how we back up and recover information. Once you set up design projects, you’re able to save and restore them. That allows you to be able to recover very quickly from a disaster or [if] someone has run over a pole. To recover from that event, you simply import the saved information and you’re back up and running. There are also some tamper functions such as automatic detection if the sensor goes out of view or if someone physically obstructs it. In three-dimensional data technology it’s kind of a big deal because it’s not that easy to achieve that with the technology. [Having these capabilities] is part of what makes it a commercialized product versus a cool demo [of what could be done].

P&M: What makes SENSR 3.0 different or unique from other perception software in the market, or even from previous versions of it?

WM: Our biggest differentiator compared to anybody else out there is the three-dimensional deep learning aspect. It’s data rich; essentially the more data we ingest into the system the better it becomes. Unlike rule-based systems where you say ‘if this happens then that happens’ and that’s the outcome, with [SENSR 3.0] the more data it gets the stronger it gets. I wouldn’t say the more intelligent it gets but basically the more stable it becomes and the more advanced it becomes with a data-driven system.

But that’s our biggest differentiator that we’re using deep learning and 3D data. Also, that we are a 3D sensor agnostic platform. We can mix and match many different major manufacturers’ sensors in the same environment. In the world of lidar there are different types of sensors such as 360 rotational, forward facing and hemispheric top. We are able to fuse those all together very easily with our quick tune function in the same world and the operator doesn’t know the difference of what sensors are being used; the scan patterns are a little different but the objects passing through the fields of view are seamless.
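
As a rough illustration of what fusing several sensors "in the same world" involves, the Python sketch below moves each sensor's points into one shared coordinate frame using that sensor's calibrated pose, then merges them. It is a simplified sketch under stated assumptions, not Seoul Robotics' method: the function names `to_world` and `fuse` are hypothetical, and each pose is reduced to a yaw angle plus an offset, whereas a real installation would typically use a full six-degree-of-freedom transform.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Pose = Tuple[float, Point]  # (yaw in radians, sensor position in the world frame)


def to_world(points: List[Point], yaw_rad: float, offset: Point) -> List[Point]:
    """Rotate points about the vertical axis, then translate into the world frame."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    ox, oy, oz = offset
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in points]


def fuse(clouds: Dict[str, List[Point]], poses: Dict[str, Pose]) -> List[Point]:
    """Merge per-sensor point clouds into one cloud in the shared world frame."""
    merged: List[Point] = []
    for sensor_id, points in clouds.items():
        yaw, offset = poses[sensor_id]
        merged.extend(to_world(points, yaw, offset))
    return merged
```

Once every point is expressed in the same frame, downstream detection and tracking no longer need to know which sensor produced it, which is why an operator would not see a difference between sensor types.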

Think of an airport terminal – you arrive and have check in, security, the gate – we have the ability to fuse information about all of those points at the airport seamlessly into the system. Each of those areas, or data, get brought together and we can continually see movements of an individual on their journey through the airport. The purpose of that will be to improve service, to improve flow of people and queue management, things like that. That is a big difference because that is super hard to do with video. With us it’s very easy to see that entire world and it’s fully three-dimensional too. The other thing is there is no biometric data; we don’t know who you are. We know you are human but we can’t tell if you’re male or female, we don’t know any of that information so your identity is protected. But we have the ability to have high levels of accuracy to allow services to be improved without upsetting people [who may not want to be recorded]. This is a good alternative technology to enable service improvements and understanding data you’re looking at but without compromising people’s data.

P&M: What sort of applications can SENSR 3.0 be applied in?

WM: We’re seeing very interesting verticals; every day something new actually. But where we find a lot of attention right now is in the intelligent transportation space, in smart infrastructure. I think the market is ready for some new technology, especially to provide insights regarding vulnerable road users which are cyclists and pedestrians. There hasn't really been reliable technology to solve those problems and there are big government drives to reduce fatalities for pedestrians and cyclists in cities, especially with the move to be greener. So that's a very good fit for the technology – to understand, improve and be more proactive versus reactive within those environments.

I’d say that’s our number one vertical today followed by smart spaces which are the airports and train stations where you’ve got lots of people and you're trying to improve service, you're trying to plan it better to reduce the frustration of your customer or your passenger. We have active deployments in over 13 major international airports. That was actually one of the first spaces we have been able to scale this technology.

If it's applicable in air travel and train stations, it could be applicable in universities and schools; those are some other places it could go into. We also find the technology going into security type applications where, again, people want to feel safe, but they also don't want [their] biometric data [shared] so you have to figure out a way to still provide a service, protect people in a proactive way and this technology is finding its place in that space.

We're getting into some industrial applications too where we're making smart factories. More on the safety side, looking at a full warehouse with forklifts and people and trying to avoid those conflict zones between [people and forklifts]. Our data and technology was born from autonomous vehicles, that’s actually the core technology behind everything we have. In some ways, the language and format required for connected and autonomous vehicles [are the same as for smart infrastructure]. If you think about 10-15 years from now, if this data is being deployed within smart infrastructure it’s potentially usable for connected and autonomous vehicles to allow them to see further, allow their advanced safety systems to function even better.

It's almost like it’s the building block that’s required first before we actually put mainstream autonomy on the streets because this is going to give them additional eyes. There is a lot of back and forth about whether it should be on the vehicle or the infrastructure. I believe it should be on both because one helps the other and vice versa. So we are seeing that this is an important step to take right now to allow that to happen.

The Level 5 Control Tower is the result in a controlled environment. Ultimately, it’s all about the data and the richness of the data. [In all use cases] everybody is after the data and how that data is going to solve current and future problems.

P&M: Since you touched on it, could you talk about the Level 5 Control Tower technology and how SENSR 3.0 fits in with it?

WM: There is a share of core software between SENSR 3.0 and Level 5 Control Tower. Level 5 Control Tower is its own platform, but it does share some pieces with, as I mentioned, SENSR 3.0. It's kind of the next step – SENSR 3.0 is now and Level 5 Control Tower is the future.

Level 5 Control Tower is turning autonomy on its head a little bit by saying not every vehicle or every mobile device that potentially comes out is going to be equipped to be autonomous capable. It might not have the sensors on board, it might not have everything that’s required, or it might be at a certain level that does not allow that to happen. What we do is offer an infrastructure, right now a private infrastructure – such as at an automotive manufacturing facility – to automate where there may be vehicles at different levels of manufacture that can drive but are not outfitted to be fully autonomous.

We look at the whole infrastructure – we know where all the people are, the vehicles, the parking spots, we know everything in that infrastructure – and in real time are able to drive a vehicle from point A to point B and understand where it is [in that manufacturing environment]. In our BMW use case, [we’re automating movement of] those vehicles coming off the production line. A person typically needs to park them and do so in an organized manner as well as know everything else around them.

No sensors are added to the vehicles, they are relying on us as their eyes to go to a designated parking spot. The vehicles get directions of where to go and are sent on their way. If a person is in the way the vehicle stops and waits until it’s safe to move again. It’s a safety aspect as well as a productivity enhancement. As you can imagine, there is a huge labor shortage in the world. This is another way to continue to provide the consumer with product without the stresses of not having people to do the work.
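
The stop-and-wait behavior described here can be sketched as a simple clearance check run by the infrastructure on each update. This Python example is a deliberately reduced illustration, not the Level 5 Control Tower logic: the function name `may_proceed` and the 3-meter default clearance are assumptions chosen for the example, and "in the way" is simplified to "within a fixed radius of the vehicle."

```python
import math
from typing import Iterable, Tuple

Point2D = Tuple[float, float]


def may_proceed(
    vehicle_xy: Point2D,
    people_xy: Iterable[Point2D],
    clearance_m: float = 3.0,  # assumed safety radius, for illustration only
) -> bool:
    """Return True only if every detected person is outside the clearance radius."""
    return all(math.dist(vehicle_xy, person) >= clearance_m for person in people_xy)
```

In this sketch the vehicle would be told to hold position whenever `may_proceed` returns `False` and to resume once it returns `True` again; a production system would also account for the planned path, vehicle speed and sensor latency.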

This is interesting because [some might] automatically think this technology is going after people's jobs. It's not the case; there's nobody to do the job, that’s the problem. As a society we want to produce more product, but there are not enough people to actually do the work.

So that's what Level 5 Control Tower does, it puts all the perception onto the infrastructure. It's an initial bigger investment, but once in place [the manufacturer] can then control hundreds of vehicles through that facility [and] know where they are and where they're going. And it's proven; it's been running for 2 years now. The idea is obviously for it to scale up.

From a [SAE] Level 5 autonomy point of view, not everybody is [necessarily] going to have Seoul Robotics’ Level 5 Control Tower platform but if we have the data available, and we understand that world, which we do already, we can potentially make that data available to other Level 5 capable technologies that wish to understand infrastructure-based data. Think about a vehicle that's autonomous – if it makes a left turn, it might be blind because sensors can only see so much with their line of sight. But if it notices from a smart infrastructure point of view what's around that corner, whether it's a pedestrian, cyclist or another vehicle, [the autonomous vehicle] can navigate that turn more safely.

P&M: How does the company foresee sensor and software technology progressing for the purposes of automation in the coming years?

WM: I feel that it’s extremely important for, especially with how fast technology is moving, any organization or business to focus more on the software level of things, the actual enabler, the connector. The reason I say that is the hardware side of things is changing and evolving so rapidly and the last thing you want to do is find yourself investing or boxed into proprietary technology that is being passed on a daily basis by another technology. Software can always evolve, it can always adapt, it can always keep improving. The hardware it lives on, if it’s an open architecture, allows it to also move with the progression of the hardware.

That’s our vision at Seoul Robotics – we know there’s going to be the next greatest supercomputer, there’s going to be the next best sensor coming out, we want to position ourselves in a way that whatever customer invests in Seoul Robotics technology we are future proofing it. We are allowing customers to grab that next generation of technology and not throw away what you have but add on to what you have, continue building and evolving instead of ripping everything out and starting fresh. Because I think what that does is slows down the whole progression.

I feel that is an important [aspect] people don’t think about, especially from a hardware company point of view. You’re hyper focused on selling the component – you can touch it, feel it, interact with it, it’s a lot easier for people to get attached to. Software is not a physical something, it’s hard for people to understand. When talking to customers and they talk about what hardware can do, I say ‘yes, hardware is fantastic but what do you do with it? What is its use?’ Something has to enable it, make it do something and give you information that you can use with it. That’s where software companies are so important because they are that enabler; they are that conduit that says ‘I’m going to take your information, I’m going to understand the use case requirements, bring it together and deliver you something that is solving a problem. And from that point on I’m putting you in a position to allow you to keep modernizing as technology evolves.’

If you think about during the [COVID-19] pandemic, there was such a shortage of components and hardware. You can imagine, if you’re tied into proprietary technology and something like that happens again, you’re completely crippled by the fact that you cannot access that technology. Whereas we didn’t suffer from that because we were able to get technology that was available and continue allowing customers to progress.

About the Author

Sara Jensen

Executive Editor, Power & Motion

Sara Jensen is executive editor of Power & Motion, directing expanded coverage into the modern fluid power space, as well as mechatronic and smart technologies. She has over 15 years of publishing experience. Prior to Power & Motion she spent 11 years with a trade publication for engineers of heavy-duty equipment, the last 3 of which were as the editor and brand lead. Over the course of her time in the B2B industry, Sara has gained an extensive knowledge of various heavy-duty equipment industries, including construction, agriculture, mining and on-road trucks, along with the systems and market trends which impact them such as fluid power and electronic motion control technologies.
