Emerging Sensor Design Requirements for Embedded Vision

Sensors play an important role in enabling automated systems and autonomous vehicles, working together with vision systems to help a vehicle or machine detect its surroundings. As the capabilities of automation evolve, so too do the design requirements for the sensors that give these systems visibility of their surroundings.

According to IDTechEx, the use of embedded vision systems is on the rise to enable greater levels of autonomy in robotics, vehicles and industrial applications, among others. These systems will require new sensor types and designs that enable improved detection, processing and analysis, enhancing the capabilities of automated systems and vehicles while helping them operate more safely and productively.

In its report "Emerging Image Sensor Technologies 2023-2033: Applications and Markets," IDTechEx outlines the various sensor types entering the market to support automation, including technologies suited to embedded vision systems such as spectral imaging and event-based vision.

What is Embedded Vision?

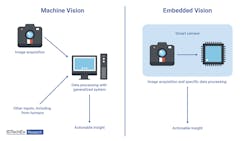

Sensors collect and transmit data about their surroundings, which vision systems can use for various application requirements, such as detecting objects or guiding movements. Typically, the information from the sensor is sent to a central processor for analysis.

However, embedded vision systems perform initial analysis of the collected data adjacent to the sensor, or on the 'edge'. This is often done using a dedicated, application-specific processor, IDTechEx explained in its press release announcing its sensor technology report.

As a result, data transmission requirements can be reduced, because only the pertinent information, i.e. the conclusions drawn from the data analysis (such as a detected object), is transmitted rather than all of the acquired data, as is typically the case. Because there is less data to transmit, results, and thus the response of the automated system or vehicle, can be delivered more quickly.
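To put rough numbers on this data reduction, the Python sketch below compares the size of one uncompressed camera frame with a compact list of detection results. The frame resolution, the `Detection` fields and the binary packing format are hypothetical assumptions chosen for illustration; they are not from IDTechEx and do not represent any particular sensor or protocol.

```python
import struct
from dataclasses import dataclass

# Hypothetical detection record; field choices are illustrative assumptions.
@dataclass
class Detection:
    class_id: int      # e.g. 0 = pedestrian (hypothetical label map)
    confidence: float  # 0.0 to 1.0
    x: int             # bounding-box top-left corner, in pixels
    y: int
    width: int
    height: int

# Assumed packed layout: uint16 class, float32 confidence, 4 x uint16 box values.
DETECTION_FORMAT = "<Hf4H"

def raw_frame_bytes(width: int, height: int, channels: int, bytes_per_channel: int = 1) -> int:
    """Bytes needed to send one uncompressed frame off the sensor."""
    return width * height * channels * bytes_per_channel

def encode_detections(detections: list[Detection]) -> bytes:
    """Serialize only the conclusions (detections) for transmission."""
    return b"".join(
        struct.pack(DETECTION_FORMAT, d.class_id, d.confidence, d.x, d.y, d.width, d.height)
        for d in detections
    )

# Assumed 1920x1080 RGB sensor with 8 bits per channel.
frame = raw_frame_bytes(1920, 1080, channels=3)
payload = encode_detections([
    Detection(0, 0.92, 640, 300, 80, 200),
    Detection(2, 0.81, 1200, 420, 300, 180),
])
print(f"raw frame: {frame} bytes, detections: {len(payload)} bytes")
# prints "raw frame: 6220800 bytes, detections: 28 bytes"
```

Under these assumptions, the raw frame is roughly 6.2 MB while two detections fit in 28 bytes. Real systems often compress video and attach richer metadata, so the actual ratio will vary.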

Vision systems are an important part of advanced driver assistance systems (ADAS) as well as mobile robots and automated industrial processes such as conveying. Their ability to quickly detect objects and determine appropriate actions is critical to ensuring safety and productivity.

This will be especially important as the passenger car and trucking industries move closer toward fully autonomous vehicle operation, where safety is the number one concern for both drivers and others in the vicinity of a vehicle.


New Sensor Design Requirements

The use of embedded vision will bring new design priorities to the forefront for sensors. IDTechEx said performance metrics such as resolution and dynamic range are typically a priority for image sensing applications, but embedded vision systems require sensor designs to focus on other aspects.

Reduced Weight, Size and Power

While the sensor must still collect good data for image processing, no one views a picture produced from that data, as they would in other applications. Instead of maximizing image quality, IDTechEx said it is more important for embedded vision system sensors to be lightweight and compact, and to minimize power consumption.

Reducing size, weight and power (SWAP) allows more sensors to be used in applications with size and weight constraints, such as drones. IDTechEx said small image sensors, such as those originally developed for smartphones, will likely be used in embedded vision systems. Integration advancements over time could also allow the sensing and processing functions to be stacked, further supporting a compact sensor design.

Opportunities for Use of Other Sensor Types

Because embedded vision does not require the same image processing as other vision system applications, it may be possible to use sensors other than the conventional types currently on the market. For instance, image sensors that detect RGB pixels in the visible range may not be necessary; instead, monochrome sensors could be used for simpler algorithms such as edge detection to locate objects, IDTechEx explained. This can help reduce system design costs, as these types of sensors are less expensive.
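As an illustration of how simple such an algorithm can be, the following sketch applies a Sobel edge detector (a common edge-detection technique chosen here as an example; IDTechEx does not specify one) to a single-channel image, which carries one value per pixel instead of the three an RGB sensor produces. The image, threshold and function names are hypothetical.

```python
# Minimal Sobel edge detector on a single-channel (monochrome) image.
# Illustrative sketch only; image, threshold and names are hypothetical.

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img: list[list[int]]) -> list[list[float]]:
    """Gradient magnitude for interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            # Convolve the 3x3 neighborhood with both Sobel kernels.
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * p
                    gy += SOBEL_Y[i][j] * p
            out[r][c] = (gx * gx + gy * gy) ** 0.5
    return out

def edge_columns(img: list[list[int]], threshold: float) -> list[int]:
    """Columns containing at least one edge pixel above the threshold."""
    mag = sobel_magnitude(img)
    return sorted({c for row in mag for c, v in enumerate(row) if v > threshold})

# 6x6 monochrome image: dark background (10) with a bright object (200) in columns 3-5.
image = [[10, 10, 10, 200, 200, 200] for _ in range(6)]
print(edge_columns(image, threshold=100))  # prints [2, 3]
```

The detected edge columns mark the boundary between the dark background and the bright object, which is enough to locate it without any color information.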

However, the research firm also notes that an argument could be made for using more sophisticated sensors, as their added capabilities could reduce the need for subsequent processing, a key benefit of embedded vision systems.

IDTechEx notes that adding spectral resolution to image sensors is one way to achieve this in applications such as material identification, because a smaller training data set is typically required. However, current spectral sensor designs struggle to meet embedded vision system SWAP requirements. These sensors often have a bulky architecture because they must house diffractive optics.

Several new technology developments aim to overcome this challenge, including:

- MEMS (microelectromechanical systems) spectrometers,

- increasing optical path length using photonic chips, and

- adding multiple narrow bandwidth spectral filters using conventional semiconductor manufacturing techniques.
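One simple way to see why spectral data can reduce training requirements for material identification is the spectral angle mapper, a classic technique swapped in here for illustration rather than one named by IDTechEx. Each pixel's spectrum, such as readings from a set of narrow-band filters, is compared with a small library of reference spectra instead of a large labeled image data set. The band count, reference values and angle threshold below are made-up assumptions.

```python
import math

# Hypothetical reference library: reflectance in 4 narrow bands (made-up values).
REFERENCE_SPECTRA = {
    "material_a": [0.10, 0.20, 0.60, 0.80],
    "material_b": [0.70, 0.60, 0.30, 0.20],
    "material_c": [0.40, 0.40, 0.40, 0.40],
}

def spectral_angle(a: list[float], b: list[float]) -> float:
    """Angle in radians between two spectra; insensitive to overall brightness."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)

def classify(pixel_spectrum: list[float], library=REFERENCE_SPECTRA, max_angle: float = 0.1):
    """Return the closest reference material, or None if none is within max_angle."""
    best_name, best_angle = None, float("inf")
    for name, ref in library.items():
        angle = spectral_angle(pixel_spectrum, ref)
        if angle < best_angle:
            best_name, best_angle = name, angle
    return best_name if best_angle <= max_angle else None

# A dimly lit sample of material_a (half the brightness) still matches it.
print(classify([0.05, 0.10, 0.30, 0.40]))  # prints material_a
```

Because the angle ignores overall brightness, a dimly lit sample still matches its reference, and supporting a new material only requires adding one reference spectrum.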

Event-based vision is another sensing technology that IDTechEx says could benefit embedded vision systems. As the research firm explained in its press release, instead of acquiring images at a constant frame rate, as is typically the case with other sensor solutions, each pixel reports timestamps corresponding to changes in intensity. Combining better temporal resolution in rapidly changing regions with less data from static background regions reduces data transfer and subsequent processing requirements.
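The event-generation principle can be sketched in Python under a simplified model: a pixel emits an event, with a timestamp and a polarity, when its log intensity changes by more than a contrast threshold. Real event-based sensors do this asynchronously in each pixel's circuitry; emulating it by comparing two sampled frames, as below, is an approximation, and the threshold, frame values and `Event` structure are hypothetical.

```python
import math
from typing import NamedTuple

class Event(NamedTuple):
    x: int
    y: int
    timestamp_us: int
    polarity: int  # +1 = brighter, -1 = darker

def generate_events(prev_frame: list[list[float]], next_frame: list[list[float]],
                    timestamp_us: int, threshold: float = 0.2) -> list[Event]:
    """Emit an event for each pixel whose log intensity changed by more than threshold.

    Simplified frame-based emulation; intensities must be positive.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (p, n) in enumerate(zip(prev_row, next_row)):
            delta = math.log(n) - math.log(p)
            if abs(delta) > threshold:
                events.append(Event(x, y, timestamp_us, 1 if delta > 0 else -1))
    return events

# Static 4x4 scene; one pixel brightens strongly, another changes by only 5%.
frame0 = [[100.0] * 4 for _ in range(4)]
frame1 = [row[:] for row in frame0]
frame1[1][2] = 180.0
frame1[3][0] = 105.0

events = generate_events(frame0, frame1, timestamp_us=1000)
print(events)  # prints [Event(x=2, y=1, timestamp_us=1000, polarity=1)]
```

Only the pixel that changed significantly produces output; the static background and the small change at another pixel generate no data at all.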

Each pixel can also act independently, which helps increase the dynamic range of the sensor. And because data collection and processing happen within the sensing chip, the embedded vision system design can be simplified, as there is less data to handle.
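Dynamic range is commonly expressed in decibels as 20·log10 of the ratio between the largest and smallest signals a sensor can capture. The sketch below computes that figure and, assuming an idealized logarithmic pixel response (a simplifying assumption for illustration), shows that a pixel reacting independently to relative change responds the same way to a doubling of brightness in a dim region as in a bright one, without depending on a single exposure setting shared by the whole sensor. The signal values are arbitrary.

```python
import math

def dynamic_range_db(max_signal: float, min_signal: float) -> float:
    """Dynamic range in decibels: 20 * log10(max / min)."""
    return 20.0 * math.log10(max_signal / min_signal)

def log_response_change(before: float, after: float) -> float:
    """Change seen by an idealized logarithmic pixel (simplifying assumption)."""
    return math.log(after) - math.log(before)

# A scene spanning 1 to 1,000,000 (arbitrary units) covers 120 dB.
print(dynamic_range_db(1_000_000, 1))
# Doubling brightness gives the same response (up to floating-point rounding)
# in a dim region and in a bright region.
print(log_response_change(1, 2), log_response_change(10_000, 20_000))
```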

As automation in its various forms continues to grow, so too will the number of sensor types and vision system options that enable safe, efficient and productive applications.


About the Author

Sara Jensen

Executive Editor, Power & Motion

Sara Jensen is executive editor of Power & Motion, directing expanded coverage into the modern fluid power space, as well as mechatronic and smart technologies. She has over 15 years of publishing experience. Prior to Power & Motion, she spent 11 years with a trade publication for engineers of heavy-duty equipment, the last 3 of which were as the editor and brand lead. Over the course of her time in the B2B industry, Sara has gained extensive knowledge of various heavy-duty equipment industries (including construction, agriculture, mining and on-road trucks) along with the systems and market trends that impact them, such as fluid power and electronic motion control technologies.

You can follow Sara and Power & Motion via the following social media handles:

X (formerly Twitter): @TechnlgyEditor and @PowerMotionTech

LinkedIn: @SaraJensen and @Power&Motion

Facebook: @PowerMotionTech
